Profiling, Storing and Monitoring HPC Communication Data at Scale by OSU INAM

Monday, May 13, 2024 3:00 PM to Wednesday, May 15, 2024 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Research Poster

Resource Management and Scheduling, Runtime Systems for HPC, System and Performance Monitoring

Information

Poster is on display and will be presented at the poster pitch session.

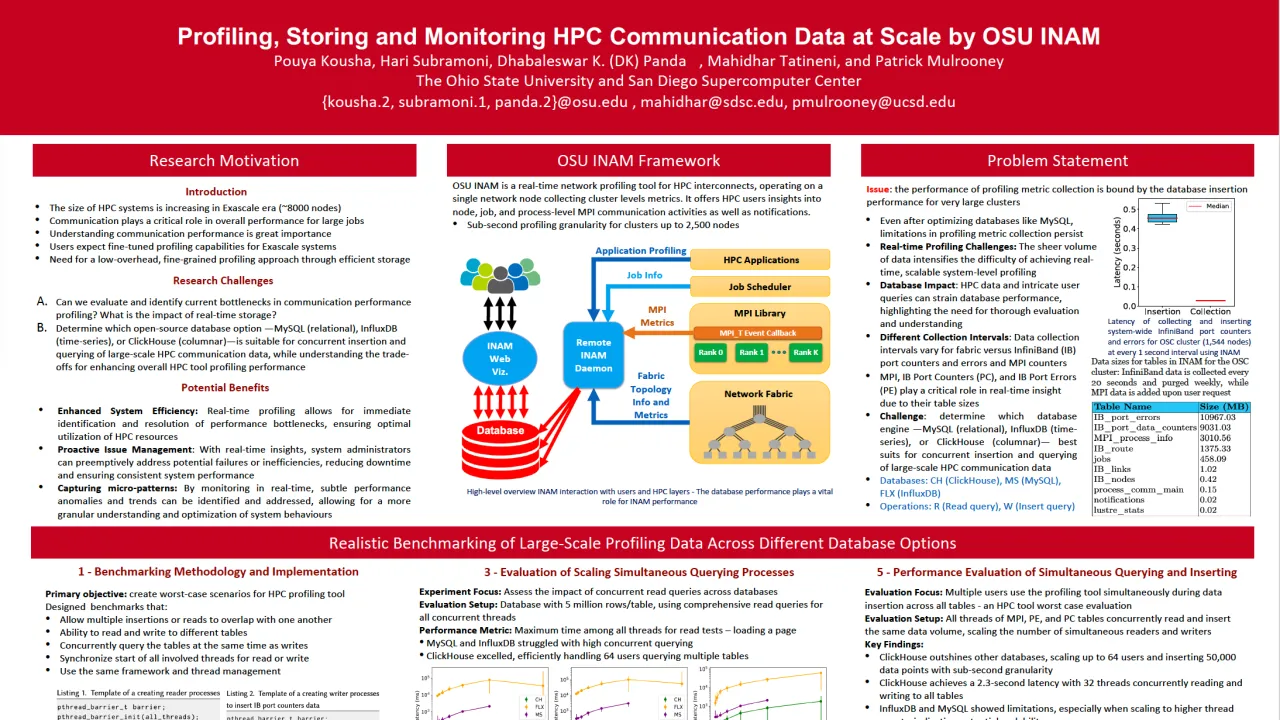

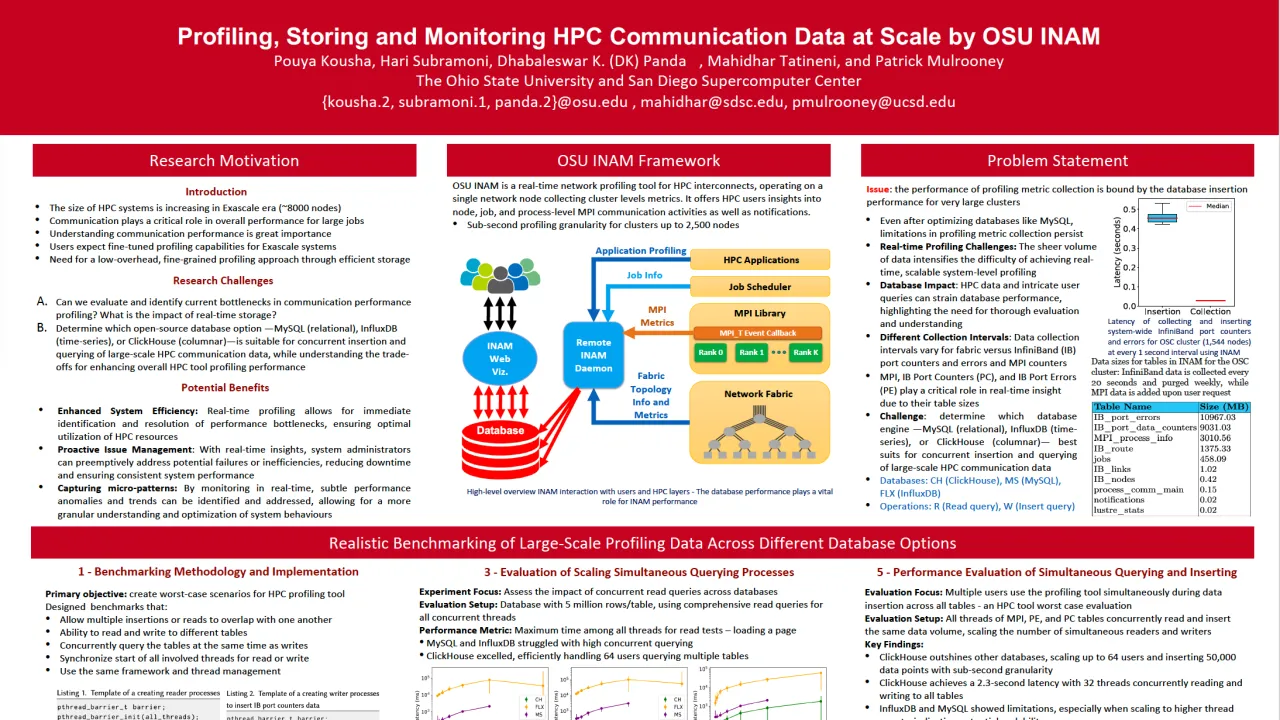

Capturing cross-stack profiles of communication on HPC systems at fine granularity is critical for understanding the detailed performance trade-offs and interplay among the various components of the HPC ecosystem. To enable this, one must be able to collect, store, and retrieve system-wide data at high fidelity. As modern HPC systems grow, high-fidelity, real-time communication profiling becomes more challenging, especially as more users employ profiling tools to monitor their workloads. In this poster we take on this challenge and identify the key performance metrics that make a database suitable for these needs. We then design benchmarks to measure and understand the performance of several popular open-source databases. Through comprehensive experimental analysis, we show the performance and scalability trends of the selected databases for different types of fundamental storage and retrieval operations under various conditions. We then integrate our findings into OSU INAM. Finally, we showcase a real-world use of INAM on SDSC systems, where the enhanced profiling capabilities enabled by this work were used to monitor and resolve performance issues on their network. With this work, we achieve sub-second complex data queries while serving up to 64 users, and we demonstrate a 9x improvement in insertion latency through parallel data insertion: inserting 200,000 rows of profiling data takes 55 ms and uses 50% less disk space. The data represents a prospective system 4x the size of the state-of-the-art Frontera supercomputer at TACC (8,368 nodes), ranked 19th.
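The abstract credits parallel data insertion for the improvement in insertion latency. The sketch below shows one way a benchmark for that idea could be structured: split a batch of profiling rows into near-equal chunks, insert the chunks concurrently, and time the whole operation. This is not OSU INAM's implementation or any database's API. `insert_batch` is a hypothetical stand-in for a database client's bulk-insert call. `fake_insert_batch` is a hypothetical backend that simulates I/O-bound per-row cost with `time.sleep`, and the row layout is illustrative only.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

# Hypothetical profiling record: (node_id, port, xmit_bytes).
Row = Tuple[int, int, float]


def partition(rows: Sequence[Row], n_parts: int) -> List[Sequence[Row]]:
    """Split rows into n_parts contiguous chunks whose sizes differ by at most 1."""
    size, rem = divmod(len(rows), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(rows[start:end])
        start = end
    return chunks


def timed_insert(rows: Sequence[Row],
                 insert_batch: Callable[[Sequence[Row]], int],
                 workers: int) -> Tuple[int, float]:
    """Insert rows with `workers` concurrent batches; return (rows inserted, wall-clock ms)."""
    start = time.perf_counter()
    if workers == 1:
        inserted = insert_batch(rows)
    else:
        with ThreadPoolExecutor(max_workers=workers) as pool:
            inserted = sum(pool.map(insert_batch, partition(rows, workers)))
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return inserted, elapsed_ms


def fake_insert_batch(batch: Sequence[Row]) -> int:
    """Hypothetical backend: sleeps 1 microsecond per row to mimic I/O, returns row count."""
    time.sleep(len(batch) * 1e-6)
    return len(batch)


def make_rows(n: int) -> List[Row]:
    """Generate n synthetic rows (illustrative values only)."""
    return [(i % 1000, i % 40, float(i)) for i in range(n)]


if __name__ == "__main__":
    rows = make_rows(200_000)
    for workers in (1, 2, 4, 8):
        n, ms = timed_insert(rows, fake_insert_batch, workers)
        print(f"workers={workers:2d} rows={n} latency={ms:.1f} ms")
```

Threads are used because a real bulk insert is typically dominated by network and disk waits, during which Python releases the GIL. Whether a real database benefits from this depends on how well it handles concurrent writers, which is the kind of property the benchmarks described above are meant to expose.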

Contributors:

Format

On-site