CraneSched: A High Performance Open-Source Intelligent Task Schedule System for Supercomputing Clusters

Monday, May 13, 2024 3:00 PM to Wednesday, May 15, 2024 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Project Poster

Resource Management and Scheduling

Information

Poster is on display.

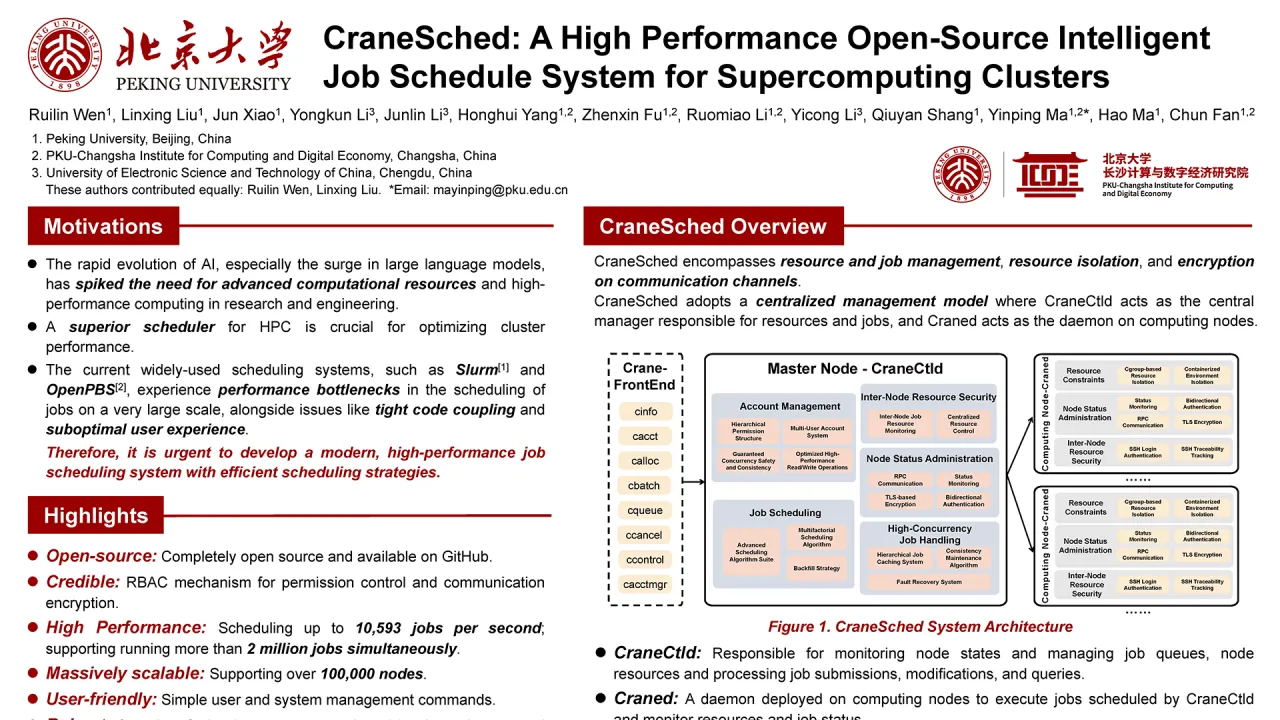

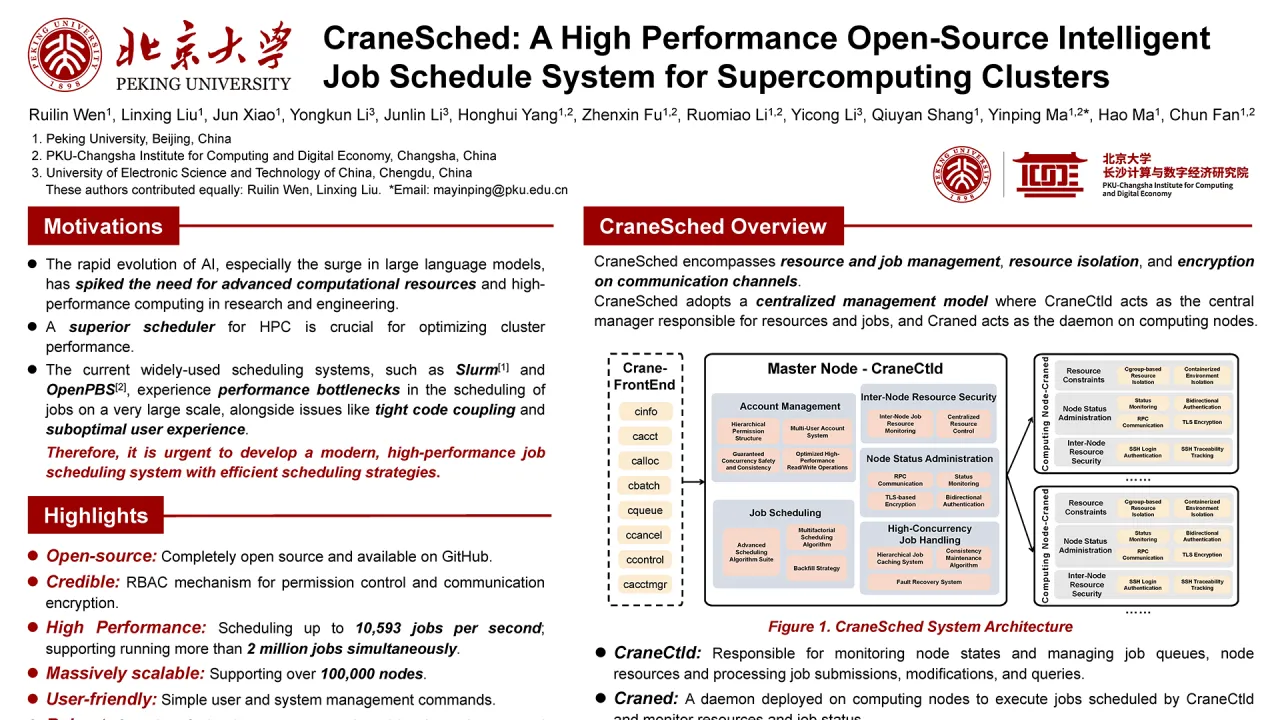

We design a high-performance, open-source task scheduling system for supercomputing clusters which names CraneSched. The system is designed to address the escalating demands of advanced computational resources, especially in the context of large-scale AI and high-performance computing. CraneSched stands out for its superior scheduling capabilities compared to widely-used systems like Slurm and OpenPBS, which face performance bottlenecks, particularly in large-scale task scheduling. Key features of CraneSched include its open-source nature, credible security measures like RBAC (Role-Based Access Control) and communication encryption, supports scheduling approximately 10,593 jobs per second and running more than 2 million jobs simultaneously, scalability to support over 100,000 nodes, user-friendliness, and robustness with automatic recovery and no single point of failure. The system architecture comprises CraneCtld as the central manager and Craned as the daemon on each computing node. CraneSched ensures high performance through optimized read/write operations, hierarchical job caching system, and advanced scheduling algorithms. The system's performance was evaluated using a practical supercomputing cluster with 132 nodes and further simulated on a larger scale with 100,000 virtual nodes created using Mininet. Additionally, a comparative analysis with Slurm was conducted using a smaller 4-node cluster, generating 3,000 virtual nodes to facilitate concurrent operation and comprehensive evaluation of both systems. CraneSched demonstrates significant performance improvements in various aspects, particularly in job queue concurrency, system efficiency, job throughput, and fault recovery mechanisms. This positions CraneSched as an advantageous alternative to current supercomputing scheduling systems, promising ongoing development to further enhance high-performance computing.

Contributors:

We design a high-performance, open-source task scheduling system for supercomputing clusters which names CraneSched. The system is designed to address the escalating demands of advanced computational resources, especially in the context of large-scale AI and high-performance computing. CraneSched stands out for its superior scheduling capabilities compared to widely-used systems like Slurm and OpenPBS, which face performance bottlenecks, particularly in large-scale task scheduling. Key features of CraneSched include its open-source nature, credible security measures like RBAC (Role-Based Access Control) and communication encryption, supports scheduling approximately 10,593 jobs per second and running more than 2 million jobs simultaneously, scalability to support over 100,000 nodes, user-friendliness, and robustness with automatic recovery and no single point of failure. The system architecture comprises CraneCtld as the central manager and Craned as the daemon on each computing node. CraneSched ensures high performance through optimized read/write operations, hierarchical job caching system, and advanced scheduling algorithms. The system's performance was evaluated using a practical supercomputing cluster with 132 nodes and further simulated on a larger scale with 100,000 virtual nodes created using Mininet. Additionally, a comparative analysis with Slurm was conducted using a smaller 4-node cluster, generating 3,000 virtual nodes to facilitate concurrent operation and comprehensive evaluation of both systems. CraneSched demonstrates significant performance improvements in various aspects, particularly in job queue concurrency, system efficiency, job throughput, and fault recovery mechanisms. This positions CraneSched as an advantageous alternative to current supercomputing scheduling systems, promising ongoing development to further enhance high-performance computing.

Contributors:

Format

On-site