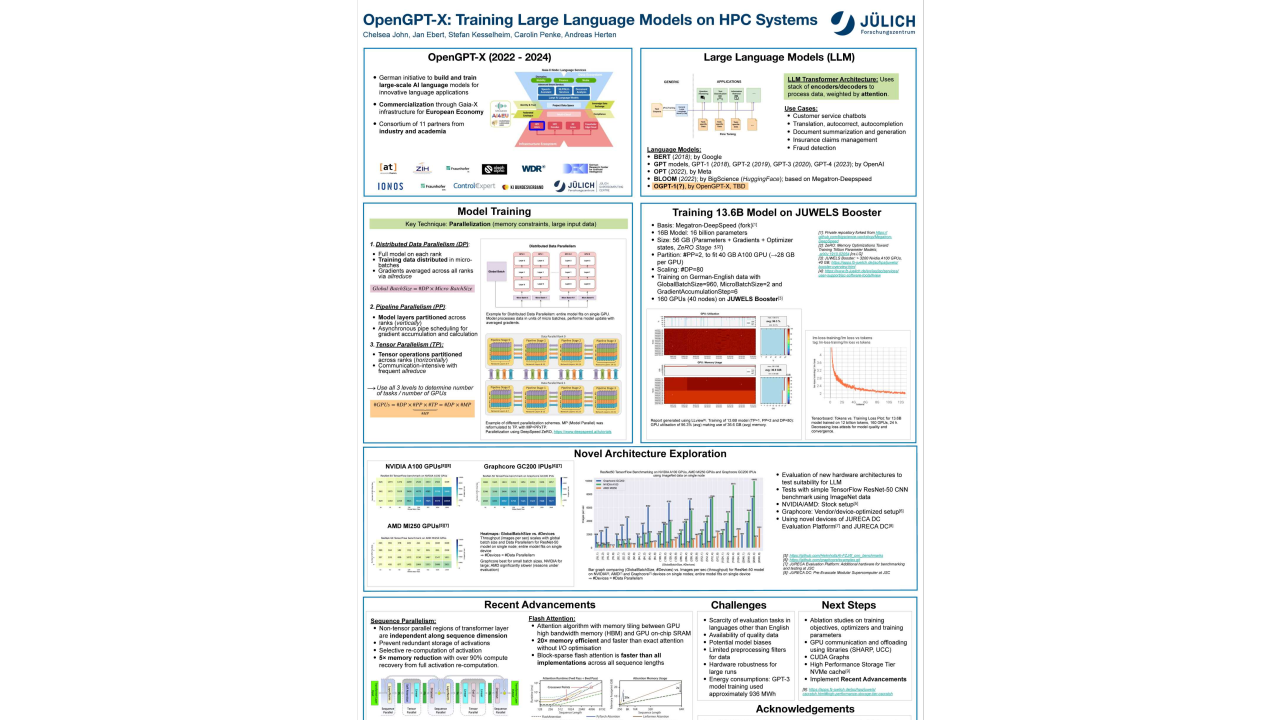

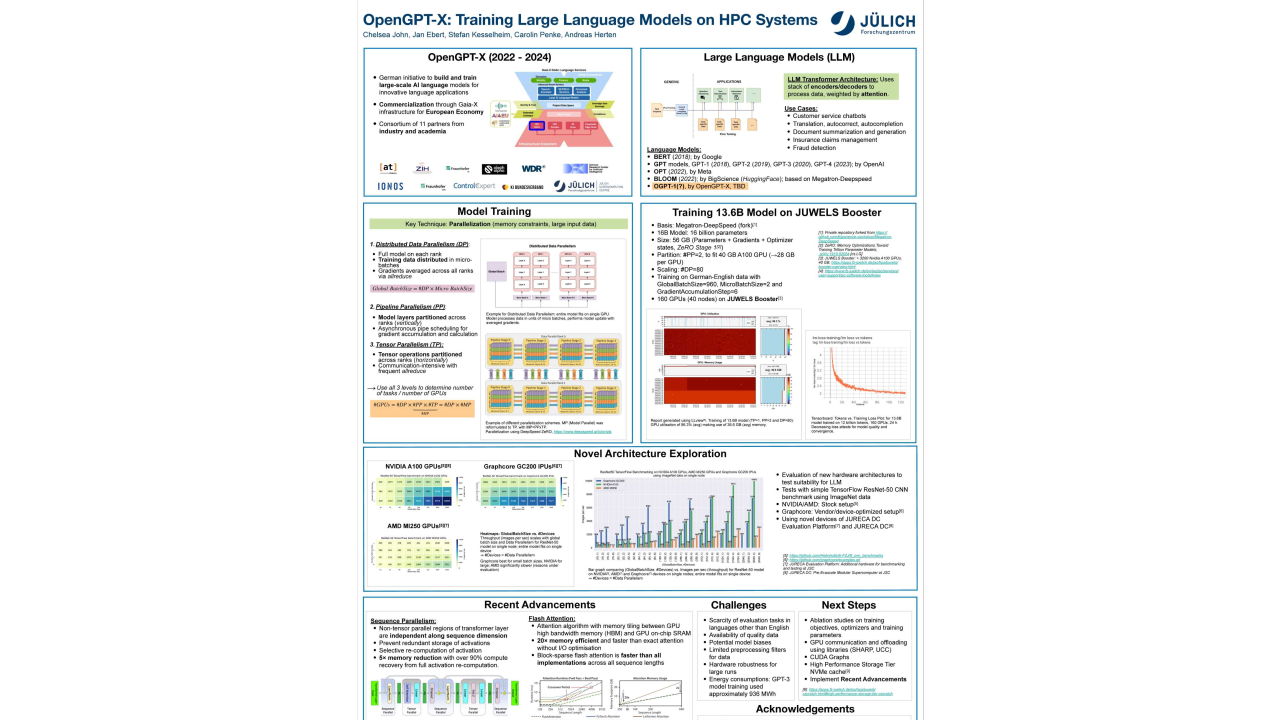

OpenGPT-X: Training Large Language Models on HPC Systems

Monday, May 22, 2023 3:00 PM to Wednesday, May 24, 2023 5:00 PM · 2 days 2 hr. (Europe/Berlin)

Foyer D-G - 2nd Floor

Project Poster

AI Applications · Emerging HPC Processors and Accelerators · HPC Workflows

Information

OpenGPT-X is a German initiative to build and train large language models (LLMs). The project aims to provide an open alternative to LLMs that have so far been proprietary, along with a platform for researching methods to train multilingual LLMs efficiently. To that end, the project not only uses state-of-the-art training methods but also incorporates new methods, algorithms, and tools. Models trained within the project will be published and used for pilot language services by industry partners. In addition, further applications are expected through Gaia-X federation. LLMs can scale to more than 175 billion parameters, which requires efficient use of supercomputers such as JUWELS Booster. Especially in light of the recent success of ChatGPT, our work indicates that the infrastructure of supercomputing centres, and initiatives that make such resources available to the public, can have a large societal impact. This poster outlines the initial progress and future work of the project at the Jülich Supercomputing Centre (JSC).
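To give a rough sense of why a model with 175 billion parameters calls for a supercomputer, the following back-of-envelope sketch estimates the memory needed just to hold the model state. It is an illustration only, not the project's own sizing: the 16 bytes per parameter figure assumes mixed-precision training with an Adam-style optimizer (fp16 weights and gradients plus fp32 master weights and two optimizer moments), the 40 GB per GPU figure is an assumed accelerator capacity, and activation memory is ignored entirely. The function names are hypothetical.

```python
def model_state_bytes(n_params: int, bytes_per_param: int = 16) -> int:
    """Memory for model state only (activations are NOT included).

    Assumed default of 16 bytes/parameter: 2 (fp16 weights) + 2 (fp16 gradients)
    + 12 (fp32 master weights and two Adam moments).
    """
    return n_params * bytes_per_param


def min_gpus(total_bytes: int, gpu_memory_bytes: int) -> int:
    """Smallest number of GPUs whose combined memory can hold total_bytes."""
    return -(-total_bytes // gpu_memory_bytes)  # integer ceiling division


N_PARAMS = 175_000_000_000   # 175 billion parameters, as quoted in the abstract
GPU_MEMORY = 40 * 10**9      # assumption: 40 GB of memory per GPU

weights_only = model_state_bytes(N_PARAMS, bytes_per_param=2)  # fp16 weights alone
training_state = model_state_bytes(N_PARAMS)                   # weights + grads + optimizer

print(f"fp16 weights:   {weights_only / 1e9:.0f} GB, "
      f"at least {min_gpus(weights_only, GPU_MEMORY)} GPUs")
print(f"training state: {training_state / 1e12:.1f} TB, "
      f"at least {min_gpus(training_state, GPU_MEMORY)} GPUs")
```

Under these assumptions the weights alone exceed the memory of a single accelerator several times over, so the model has to be partitioned across many GPUs before any training step can run. The real requirement is higher once activations and communication buffers are counted.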

Contributors:

Format

On-site

Beginner Level

70%

Intermediate Level

30%