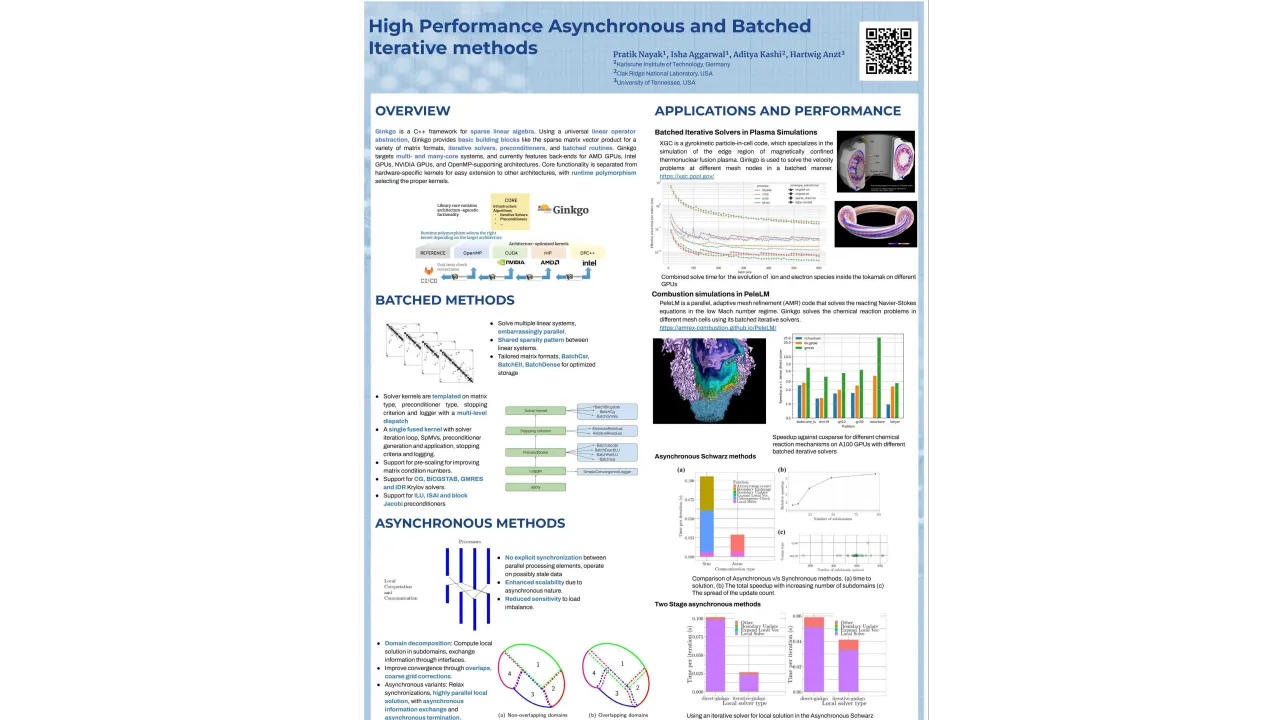

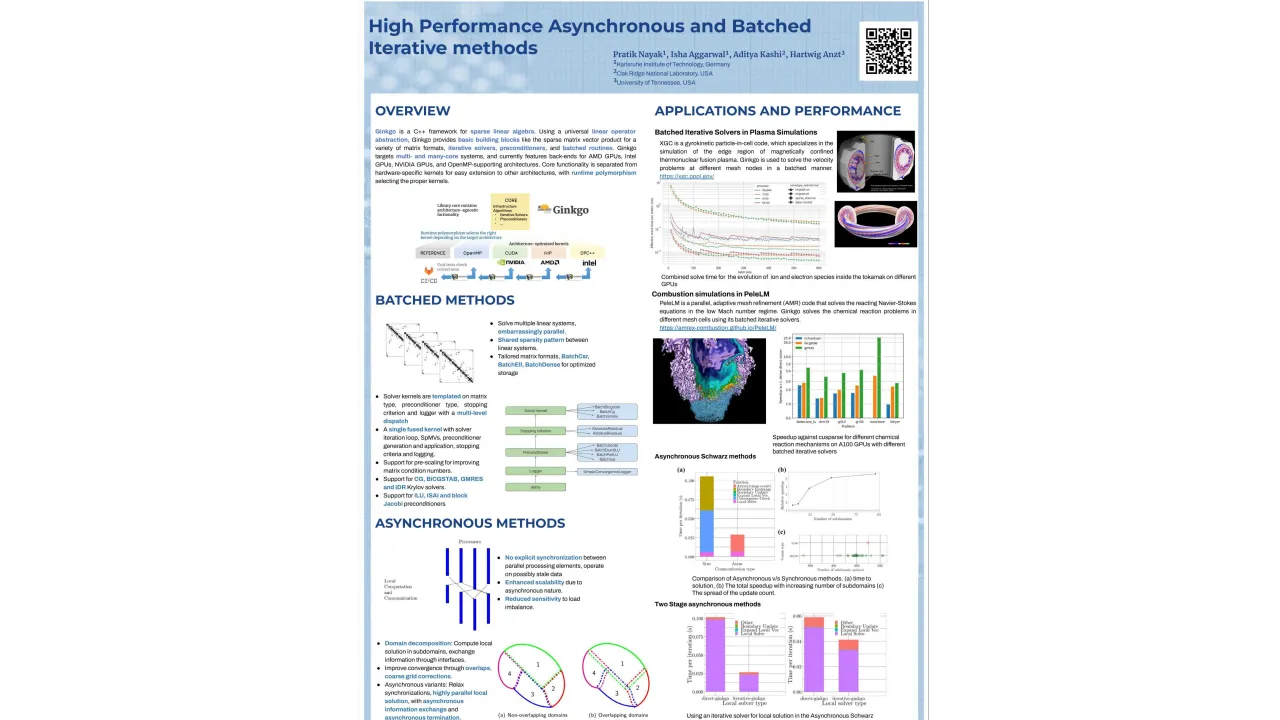

High Performance Asynchronous and Batched Iterative Methods

Monday, May 22, 2023 3:00 PM to Wednesday, May 24, 2023 5:00 PM · 2 days 2 hr. (Europe/Berlin)

Foyer D-G - 2nd Floor

Research Poster

Managing Extreme-Scale Parallelism

Numerical Libraries

Information

Scaling to thousands of computing units across thousands of nodes requires efficient use of hierarchical parallelism and minimal synchronization between parallel processing elements. We showcase two techniques: batched methods, which maximize resource utilization, and synchronization-free methods, which relax data dependencies. Together they enable high performance from a single GPU up to multiple GPUs across multiple nodes.

Within Ginkgo, our open-source numerical linear algebra library, we have implemented batched sparse iterative methods for GPUs. These methods solve a large number of linear systems that share a sparsity pattern in parallel, using optimized kernels that rely on techniques such as kernel fusion and templating to minimize data movement and maximize device occupancy. We also showcase two applications where these batched methods have provided speedups: 4x on average for a plasma simulation application and 6x on average for a combustion simulation application.
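To make the idea of a batch sharing one sparsity pattern concrete, the following is a minimal CPU sketch in plain Python. It is not Ginkgo's API and not the GPU implementation from the poster. The function name `batched_jacobi`, the helper `tridiag_values`, the choice of Jacobi as the iterative method, and the small tridiagonal test systems are all assumptions made for illustration. The CSR index arrays are stored once, each system carries only its own values and right-hand side, and each system stops on its own convergence check.

```python
# Illustrative sketch only; names and the Jacobi method are assumptions,
# not Ginkgo's interface. One CSR pattern (row_ptr, col_idx) is shared by
# the whole batch; only the values and right-hand sides differ per system.

def batched_jacobi(row_ptr, col_idx, batch_values, batch_rhs,
                   tol=1e-10, max_iters=500):
    """Run Jacobi iteration on every system of the batch.

    Returns (solutions, iteration_counts). Each system is checked for
    convergence independently, so an easy system stops early.
    """
    n = len(row_ptr) - 1
    solutions, iteration_counts = [], []
    # On a GPU this outer loop would map to independent work groups.
    for values, b in zip(batch_values, batch_rhs):
        x = [0.0] * n
        iters = 0
        for iters in range(1, max_iters + 1):
            x_new = [0.0] * n
            for i in range(n):
                diag, off = 0.0, 0.0
                for k in range(row_ptr[i], row_ptr[i + 1]):
                    if col_idx[k] == i:
                        diag = values[k]
                    else:
                        off += values[k] * x[col_idx[k]]
                x_new[i] = (b[i] - off) / diag
            x = x_new
            # Max-norm of the residual b - A x for this system only.
            res = 0.0
            for i in range(n):
                ax = sum(values[k] * x[col_idx[k]]
                         for k in range(row_ptr[i], row_ptr[i + 1]))
                res = max(res, abs(b[i] - ax))
            if res < tol:
                break
        solutions.append(x)
        iteration_counts.append(iters)
    return solutions, iteration_counts


# Shared tridiagonal pattern for 4 unknowns.
row_ptr = [0, 2, 5, 8, 10]
col_idx = [0, 1, 0, 1, 2, 1, 2, 3, 2, 3]


def tridiag_values(diag, off):
    """Values laid out to match the shared pattern above."""
    return [diag, off, off, diag, off, off, diag, off, off, diag]


# Two systems with different values; both right-hand sides equal A * ones.
batch_values = [tridiag_values(4.0, -1.0), tridiag_values(10.0, -1.0)]
batch_rhs = [[3.0, 2.0, 2.0, 3.0], [9.0, 8.0, 8.0, 9.0]]

solutions, iteration_counts = batched_jacobi(row_ptr, col_idx,
                                             batch_values, batch_rhs)
```

Storing the index arrays once is what reduces data movement in this sketch: the per-system storage is only the values array and the vectors. The per-system stopping test mirrors the reason batched solvers track convergence individually, since systems in a batch can differ in difficulty.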

Using Ginkgo, we have also implemented asynchronous variants of Schwarz domain decomposition methods, demonstrating the benefits of relaxed synchronization even for problems with good load balance. With the Laplacian and advection problems as test cases, we also demonstrate the benefits of a two-stage approach that solves the subdomain-local problems with iterative solvers of tuned accuracy.
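The sketch below simulates the two ideas in this paragraph on a 1D Laplacian in plain Python. It is a serial simulation under stated assumptions, not the poster's multi-GPU implementation and not Ginkgo code. The function name `async_schwarz_1d`, the random update order, the 0.7 halo refresh probability, and the use of Gauss-Seidel sweeps as the inexact local solver are all hypothetical choices. Asynchrony is mimicked by letting subdomains update in arbitrary order with possibly stale boundary (halo) values. The two-stage aspect is the fixed, small number of inner sweeps per local solve.

```python
import random

# Serial simulation of an asynchronous, two-stage overlapping Schwarz
# iteration for tridiag(-1, 2, -1) x = b. All names and parameters are
# illustrative assumptions.

def async_schwarz_1d(n=16, num_subdomains=4, overlap=1, inner_sweeps=3,
                     max_updates=200000, tol=1e-8, seed=0):
    rng = random.Random(seed)
    b = [1.0] * n
    x = [0.0] * n  # shared global iterate
    size = n // num_subdomains
    ranges = []
    for s in range(num_subdomains):
        lo = max(0, s * size - overlap)
        hi = n if s == num_subdomains - 1 else min(n, (s + 1) * size + overlap)
        ranges.append((lo, hi))
    # Private, possibly stale copies of the values just outside each subdomain.
    halos = [(0.0, 0.0) for _ in ranges]

    def residual_norm():
        r = 0.0
        for i in range(n):
            left = x[i - 1] if i > 0 else 0.0
            right = x[i + 1] if i < n - 1 else 0.0
            r = max(r, abs(b[i] - (2.0 * x[i] - left - right)))
        return r

    for update in range(1, max_updates + 1):
        # No global ordering or barrier: any subdomain may update next.
        s = rng.randrange(num_subdomains)
        lo, hi = ranges[s]
        # The halo is refreshed only some of the time, mimicking delayed data.
        if rng.random() < 0.7:
            halos[s] = (x[lo - 1] if lo > 0 else 0.0,
                        x[hi] if hi < n else 0.0)
        left_halo, right_halo = halos[s]
        local = x[lo:hi]
        # Second stage: inexact local solve with a few Gauss-Seidel sweeps.
        for _ in range(inner_sweeps):
            for j in range(len(local)):
                left = local[j - 1] if j > 0 else left_halo
                right = local[j + 1] if j < len(local) - 1 else right_halo
                local[j] = 0.5 * (b[lo + j] + left + right)
        x[lo:hi] = local
        if update % 50 == 0 and residual_norm() < tol:
            return x, update
    return x, max_updates


x, updates = async_schwarz_1d()
```

In this sketch, `inner_sweeps` plays the role of the tuned local accuracy: more sweeps give a more accurate subdomain solve per update at a higher cost per update, and the best setting is problem dependent.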

Contributors:

Format

On-site

Beginner Level

40%

Intermediate Level

40%

Advanced Level

20%