LLM-Powered Helpdesk Support: Building an LLM Assistant to Suggest Ticket Responses

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Project Poster

AI Applications powered by HPC Technologies · Large Language Models and Generative AI in HPC

Information

Poster is on display.

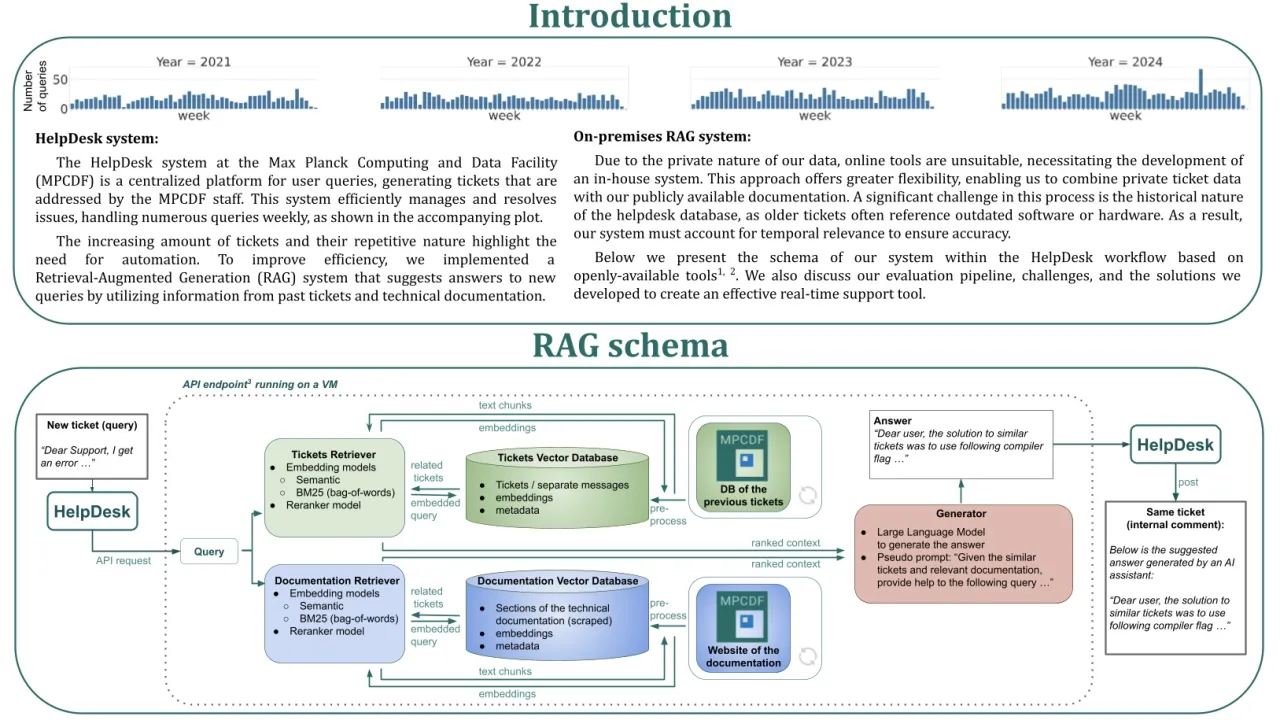

The HelpDesk system at the Max Planck Computing and Data Facility serves as a centralized platform for managing user queries, each of which becomes a ticket handled by support staff. The growing number of tickets, many of them repetitive, highlights the need for automation to improve efficiency and reduce staff workload. We developed a Retrieval-Augmented Generation (RAG) system that suggests responses to new queries by drawing on past tickets and technical documentation.
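The retrieve-then-generate idea behind a RAG system can be sketched as follows. This is a minimal sketch, not the authors' implementation: it assumes embeddings have already been computed for every past ticket and documentation page, ranks them by plain cosine similarity in one shared index, and builds a prompt that would then be sent to an in-house LLM (not shown). The names `Document`, `retrieve`, and `build_prompt` and the toy data are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    source: str             # hypothetical labels: "ticket" or "docs"
    text: str
    embedding: list[float]  # assumed precomputed by some embedding model


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_emb: list[float], corpus: list[Document], k: int = 3) -> list[Document]:
    """Return the k documents whose embeddings are most similar to the query."""
    ranked = sorted(corpus, key=lambda d: cosine(query_emb, d.embedding), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[Document]) -> str:
    """Assemble a prompt asking the LLM to answer from the retrieved context only."""
    blocks = "\n\n".join(f"[{d.source}:{d.doc_id}]\n{d.text}" for d in context)
    return (
        "You are a HelpDesk assistant. Using only the context below, "
        "draft a reply to the new ticket.\n\n"
        f"Context:\n{blocks}\n\nTicket:\n{query}\n\nSuggested reply:"
    )


# Toy 3-dimensional embeddings stand in for real model output.
corpus = [
    Document("T-101", "ticket", "Job killed: out of memory ...", [0.9, 0.1, 0.0]),
    Document("DOC-7", "docs", "How to request more memory for batch jobs", [0.8, 0.2, 0.1]),
    Document("T-202", "ticket", "Password reset request", [0.0, 0.1, 0.9]),
]
top = retrieve([1.0, 0.0, 0.0], corpus, k=2)
prompt = build_prompt("My job was killed for exceeding memory.", top)
print([d.doc_id for d in top])  # ['T-101', 'DOC-7']
```

Mixing tickets and documentation in one index lets a single query surface both a past resolution and the relevant documentation page; the source tag in each context block lets the model (and staff) see where each piece came from.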

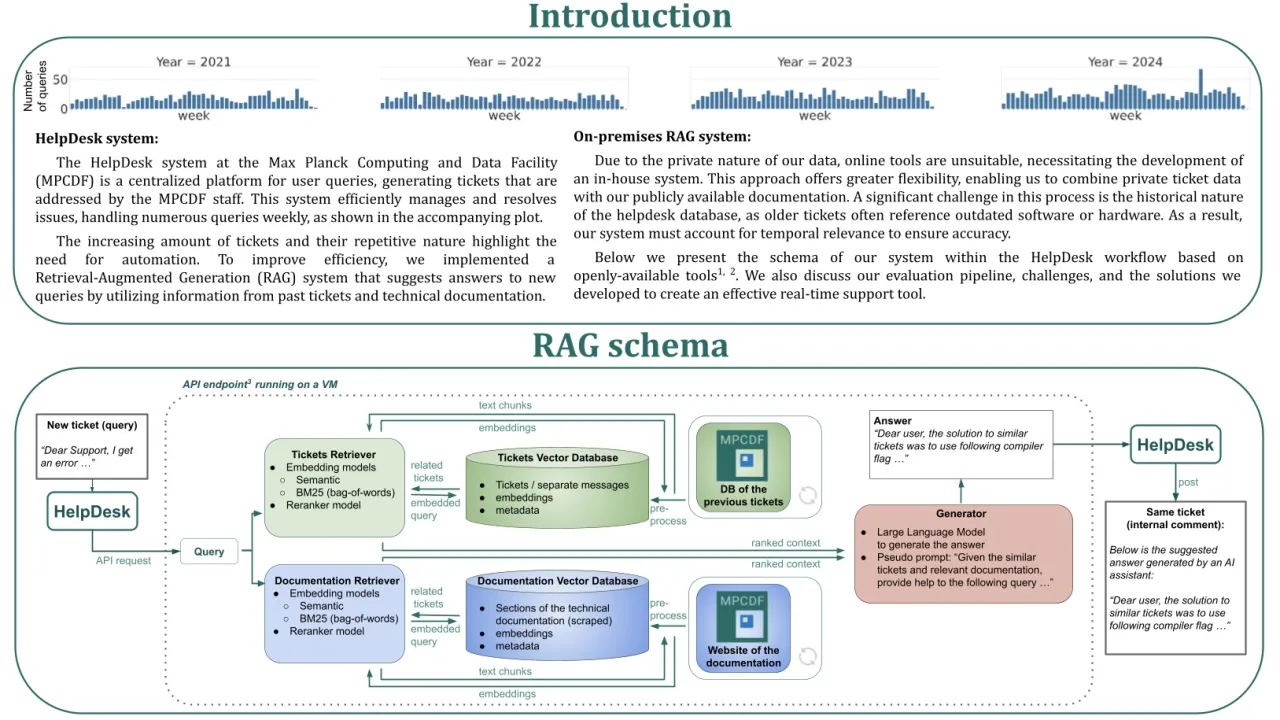

Because the data are private, external online tools are unsuitable, so an in-house solution was required. This approach preserves data privacy and offers greater flexibility, allowing private ticket data to be combined with our publicly available documentation. A key challenge is the historical nature of the ticket database: older tickets often reference outdated software or hardware. To address this, the system applies temporal filtering so that responses remain accurate and relevant.
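The abstract does not say how temporal filtering is implemented. One simple possibility, assumed here, is a hard date cutoff applied to ticket metadata before similarity ranking. `Ticket`, `temporal_filter`, and the cutoff date are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Ticket:
    ticket_id: str
    created: date
    text: str


def temporal_filter(tickets: list[Ticket], cutoff: date) -> list[Ticket]:
    """Keep only tickets created on or after the cutoff date.

    The cutoff (for example, the retirement date of an old system) is a
    hypothetical parameter that support staff would choose.
    """
    return [t for t in tickets if t.created >= cutoff]


tickets = [
    Ticket("T-001", date(2016, 3, 1), "Module not found on the old cluster"),
    Ticket("T-002", date(2023, 9, 14), "Module not found after OS upgrade"),
    Ticket("T-003", date(2024, 5, 2), "GPU job stuck in pending state"),
]
recent = temporal_filter(tickets, cutoff=date(2022, 1, 1))
print([t.ticket_id for t in recent])  # ['T-002', 'T-003']
```

Filtering before ranking keeps stale tickets from crowding out recent ones in the top-k results. A softer alternative would be to down-weight older tickets by age instead of discarding them.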

As an initial solution, we deployed a semantic search tool on a virtual machine, allowing staff to retrieve tickets semantically similar to a given query. Current efforts focus on integrating the full RAG system directly into the HelpDesk workflow: when a user creates a new ticket, a request is sent to the RAG system's API, which generates a suggested answer. The suggestion is then posted as an internal comment on the ticket, visible only to staff, streamlining the resolution process.
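The ticket-creation hook described above can be sketched as a small handler. The abstract names neither the HelpDesk software nor the API endpoints, so both services are passed in as plain callables: `suggest` stands for the call to the RAG system's API and `post_comment` for the HelpDesk's own comment API. All names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Comment:
    ticket_id: str
    body: str
    internal: bool  # True = visible to support staff only


def handle_new_ticket(
    ticket_id: str,
    ticket_text: str,
    suggest: Callable[[str], str],            # hypothetical client for the RAG API
    post_comment: Callable[[Comment], None],  # hypothetical HelpDesk API client
) -> Optional[Comment]:
    """Request a suggested answer and attach it to the ticket as an internal note."""
    try:
        suggestion = suggest(ticket_text)
    except Exception:
        # If the RAG service is unavailable, skip the suggestion rather than
        # interfering with normal ticket handling.
        return None
    comment = Comment(
        ticket_id=ticket_id,
        body="AI-suggested reply (review before sending):\n" + suggestion,
        internal=True,  # never shown to the user who opened the ticket
    )
    post_comment(comment)
    return comment


# Usage with in-memory stand-ins for the two external services.
posted: list[Comment] = []
result = handle_new_ticket(
    "T-303",
    "How do I increase my storage quota?",
    suggest=lambda text: "Based on similar past tickets, ...",
    post_comment=posted.append,
)
print(result.internal, len(posted))  # True 1
```

Injecting the two clients keeps the handler testable without network access, and catching failures means an unavailable RAG service does not block the ticket from being handled by staff as usual.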

The retriever and generator components were evaluated separately. For the retriever, we built a ground-truth dataset by generating synthetic queries from existing tickets, treating each originating ticket as the relevant context. Retriever performance was measured with the Hit Rate, the proportion of queries for which the relevant ticket was retrieved. For the generator, we manually curated question-answer pairs from the ticket database, selecting tickets that were straightforward and well resolved. Evaluation proceeded in two phases: an automated screening in which an LLM agent compared generated responses with the answers given by staff, followed by a human evaluation using the Argilla tool to determine preferences among the top-performing models.
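The Hit Rate used for the retriever can be computed as below. This sketch assumes each synthetic query has exactly one relevant ticket (the one it was generated from) and that a hit counts only within the top k results; the abstract does not state the value of k, and the function name and data are hypothetical.

```python
def hit_rate(results: dict[str, list[str]], relevant: dict[str, str], k: int) -> float:
    """Fraction of queries whose relevant ticket appears in the top-k results.

    results:  query id -> ranked list of retrieved ticket ids
    relevant: query id -> id of the ticket the synthetic query was generated from
    """
    if not relevant:
        return 0.0
    hits = sum(1 for q, gold in relevant.items() if gold in results.get(q, [])[:k])
    return hits / len(relevant)


relevant = {"q1": "T-10", "q2": "T-20", "q3": "T-30", "q4": "T-40"}
results = {
    "q1": ["T-10", "T-99", "T-55"],  # hit at rank 1
    "q2": ["T-77", "T-20", "T-12"],  # hit at rank 2
    "q3": ["T-31", "T-32", "T-33"],  # miss
    "q4": ["T-41", "T-42", "T-40"],  # hit at rank 3
}
print(hit_rate(results, relevant, k=1))  # 0.25
print(hit_rate(results, relevant, k=3))  # 0.75
```

Reporting the metric at several values of k shows how far down the ranking the relevant ticket tends to appear, which matters when only the top few results are passed to the generator.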

This work describes our experience designing, implementing, and evaluating a RAG-powered HelpDesk assistant. The system improves efficiency by automating repetitive tasks while maintaining strict data privacy and addressing domain-specific challenges such as outdated ticket content. These findings offer practical insights into building effective AI-powered tools for real-time support workflows.

Contributors:

Format

On Demand · On Site