Hybrid Black-Box and White-Box Approaches for Efficient Resource Prediction for AI Workloads in High-Performance Computing

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Project Poster

ML Systems and Tools · Performance and Resource Modeling · Performance Measurement

Information

Poster is on display.

Integrating artificial intelligence (AI) into scientific workflows has revolutionized discovery and innovation across diverse fields. Machine learning (ML), powered by high-performance computing (HPC), enables researchers to simulate task-specific data and solve complex problems at unprecedented scales. As traditional disciplines increasingly adopt ML, the demand for computational resources has surged, particularly in deep neural network (DNN) training, inference, and data storage. By 2030, AI workloads are projected to triple U.S. data center power consumption. This underscores the need for efficient resource utilization to ensure sustainable AI practices.

A one-month analysis of an AI/ML cluster revealed significant resource underutilization. GPU utilization peaked at ~50% (mean ~23%), and GPU memory usage peaked at 77% (mean ~12%). CPU and RAM usage were similarly low, peaking at ~20% (means of ~12% and ~5%, respectively). These inefficiencies stem from poor resource provisioning strategies. For instance, users frequently submit jobs to high-end A100 GPUs when V100 nodes would suffice, and they request full nodes with 80 CPUs and 4 GPUs even when their workloads need far fewer resources. Such practices leave CPUs and GPUs idle during DNN training. This raises a critical question: can the runtime and memory requirements of DNN workloads be estimated accurately enough to enable efficient resource allocation and support sustainable AI solutions?
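
To make the over-provisioning pattern concrete, the sketch below computes how much of a job's requested allocation stayed idle even at the job's peak. It is a minimal illustration, not the analysis pipeline used for the cluster study: the `JobRecord` fields and the example job (a full-node request that peaks at 16 cores and 1 GPU) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    # Hypothetical accounting record; field names are illustrative, not a scheduler's schema.
    requested_cpus: int
    requested_gpus: int
    peak_cpus_used: float  # peak number of busy CPU cores observed during the job
    peak_gpus_used: float  # peak number of GPUs with non-zero utilization


def idle_fraction(requested: float, used: float) -> float:
    """Share of a requested resource that stayed idle even at the job's peak."""
    if requested <= 0:
        return 0.0
    # Clamp at zero in case telemetry reports more than was requested.
    return max(0.0, 1.0 - used / requested)


def report(job: JobRecord) -> dict[str, float]:
    """Idle fractions for the CPU and GPU portions of one job's allocation."""
    return {
        "cpu_idle": idle_fraction(job.requested_cpus, job.peak_cpus_used),
        "gpu_idle": idle_fraction(job.requested_gpus, job.peak_gpus_used),
    }


# Hypothetical full-node request (80 CPUs, 4 GPUs) for a job that peaks at 16 cores and 1 GPU.
job = JobRecord(requested_cpus=80, requested_gpus=4, peak_cpus_used=16, peak_gpus_used=1)
print(report(job))
```

Here 80% of the requested cores and 75% of the requested GPUs are never used; an accurate runtime and memory predictor would let the scheduler size the request before the job starts instead of after.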

Runtime estimation for DNN workloads is challenging: it is an NP-hard problem influenced by hardware, runtime environments, training data, model complexity, and hyperparameters. Black-box resource estimators provide application-specific predictions but struggle to generalize to new DNN architectures without substantial training data. Synthetic data generation can mitigate this data scarcity, but ensuring efficiency and realism in the emulated data is a significant hurdle. Additionally, how reusable emulations are across diverse configurations remains an open problem.

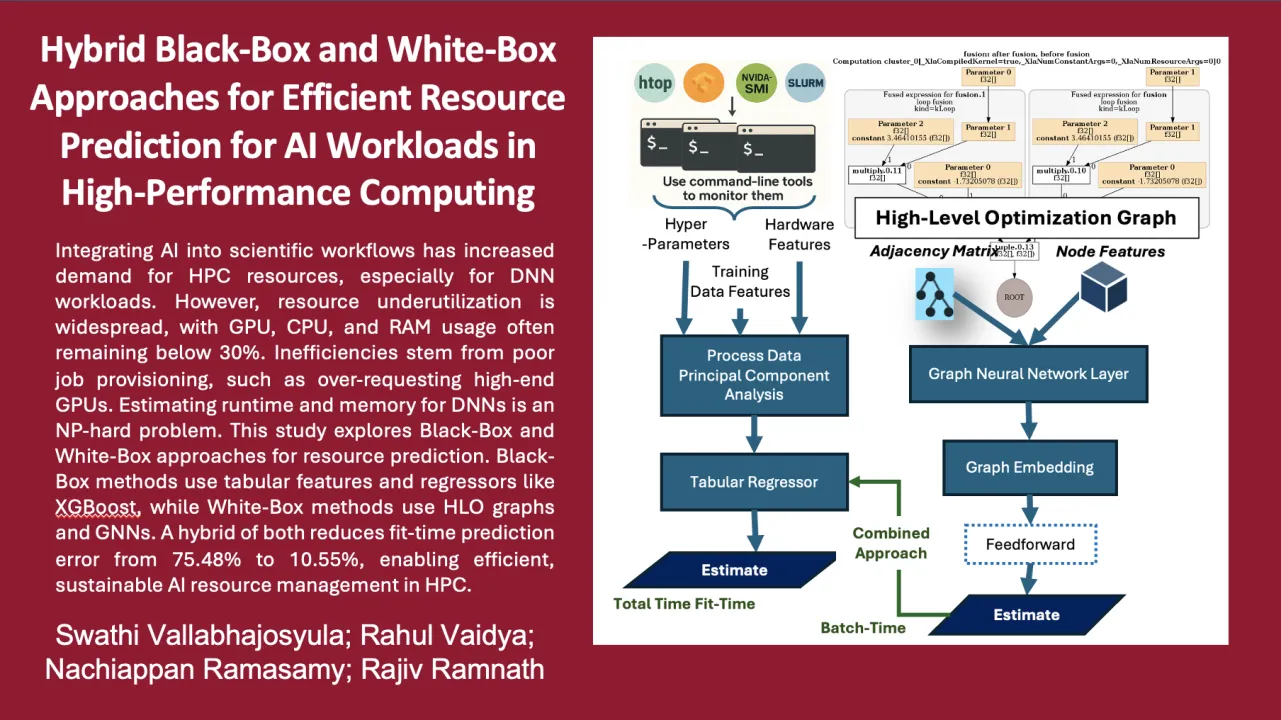

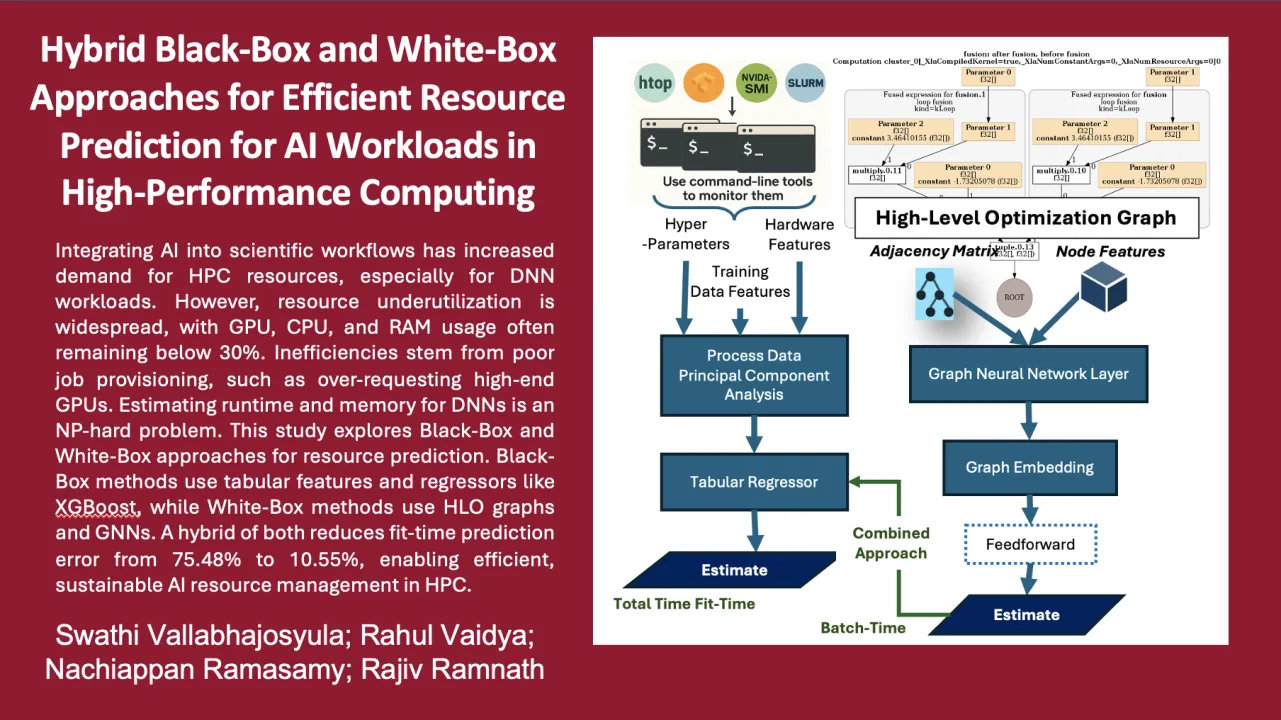

We propose two approaches for DNN resource prediction: Black-Box and White-Box. The Black-Box approach uses tabular features, such as hardware specifications, hyperparameters, input metadata, and handcrafted model features, which enable fast training with traditional regression models. However, it requires extensive training data and does not scale well to novel architectures. In contrast, the White-Box approach extracts graph-based features from High-Level Optimized (HLO) graphs, eliminating the dependency on handcrafted features. Although this approach adapts to new DNN layers, it incurs additional overhead because it requires hardware-based feature extraction during model inference.
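
The difference between the two feature pipelines can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the Black-Box side flattens one job into a fixed-length tabular row, while the White-Box side turns a compiled op graph into one-hot node features and an edge list, the kind of input a GNN consumes. `OpNode` is a simplified, hypothetical stand-in for an HLO instruction (it is not an XLA data structure), the GPU encoding is made up, and `sum_pool_embedding` performs one untrained round of message passing with sum pooling purely to show how graph structure reaches a fixed-size vector; a real GNN would apply learned weights.

```python
from collections import Counter
from dataclasses import dataclass, field


# --- Black-Box: one fixed-length tabular row per job ---
@dataclass
class JobConfig:
    gpu_model: str    # hardware specification
    num_gpus: int
    batch_size: int   # hyperparameters
    epochs: int
    num_samples: int  # input metadata
    num_params: int   # handcrafted model feature


GPU_CODES = {"V100": 0.0, "A100": 1.0}  # hypothetical categorical encoding


def black_box_features(cfg: JobConfig) -> list[float]:
    """Flatten a job configuration into a row for a traditional regressor."""
    return [GPU_CODES[cfg.gpu_model], float(cfg.num_gpus), float(cfg.batch_size),
            float(cfg.epochs), float(cfg.num_samples), float(cfg.num_params)]


# --- White-Box: inputs derived from the compiled op graph ---
@dataclass
class OpNode:
    # Simplified, hypothetical stand-in for an HLO instruction.
    name: str
    opcode: str
    operands: list[str] = field(default_factory=list)


def white_box_inputs(graph: list[OpNode], vocab: list[str]):
    """One-hot opcode features per node plus a directed edge list (operand -> user)."""
    index = {node.name: i for i, node in enumerate(graph)}
    node_features = [[1.0 if node.opcode == op else 0.0 for op in vocab] for node in graph]
    edges = [(index[src], index[node.name]) for node in graph for src in node.operands]
    return node_features, edges


def sum_pool_embedding(node_features, edges) -> list[float]:
    """One untrained message-passing round (add each operand's features to its user), then sum pooling."""
    hidden = [row[:] for row in node_features]
    for src, dst in edges:
        for k, value in enumerate(node_features[src]):
            hidden[dst][k] += value
    return [sum(col) for col in zip(*hidden)]


# Toy graph: add(dot(p0, p1), p1). Opcode counts summarize it without any handcrafted model features.
graph = [OpNode("p0", "parameter"), OpNode("p1", "parameter"),
         OpNode("dot", "dot", ["p0", "p1"]), OpNode("add", "add", ["dot", "p1"])]
vocab = ["parameter", "dot", "add"]
feats, edges = white_box_inputs(graph, vocab)
print(Counter(node.opcode for node in graph))
print(sum_pool_embedding(feats, edges))
print(black_box_features(JobConfig("A100", 4, 64, 10, 50_000, 25_000_000)))
```

The White-Box representation grows naturally with new layer types (a new opcode simply extends `vocab`), whereas the Black-Box row needs someone to design a new handcrafted column for each novel architecture.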

Experiments with these approaches demonstrate complementary strengths. When sufficient training data is available, traditional regressors such as XGBoost outperform Graph Neural Network (GNN)-based models, achieving a Mean Absolute Percentage Error (MAPE) of 10% compared to 13.54% for the GNN. In data-limited scenarios, however, combining the GNN output with XGBoost in a pipelined fashion reduces the MAPE for fit-time estimation from 75.48% to 10.55%. This demonstrates that hybrid approaches can improve prediction accuracy even with constrained training data. These findings highlight the potential of combining Black-Box and White-Box methodologies for efficient and sustainable resource prediction in AI-driven HPC environments.
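
The sketch below shows the MAPE metric and one plausible reading of the pipelined hybrid: the White-Box (GNN) estimate is appended as an extra column to the Black-Box tabular features before the second-stage regressor is fit. Both the pipeline interpretation and the regressor are assumptions: a plain least-squares linear model (`fit_linear`, solved with Gaussian elimination) stands in for XGBoost so the example runs with the standard library only, and the data are synthetic, so the printed errors do not reproduce the poster's results. The MAPE formula used is $\text{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$.

```python
def mape(y_true: list[float], y_pred: list[float]) -> float:
    """Mean Absolute Percentage Error in percent; assumes no target equals zero."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_linear(rows: list[list[float]], targets: list[float]) -> list[float]:
    """Least squares with intercept via the normal equations (stand-in for XGBoost).

    Assumes the feature columns are not collinear, so X^T X is invertible.
    """
    xs = [[1.0] + list(r) for r in rows]
    d = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * t for x, t in zip(xs, targets)) for i in range(d)]
    return solve(xtx, xty)


def predict(weights: list[float], rows: list[list[float]]) -> list[float]:
    return [weights[0] + sum(w * v for w, v in zip(weights[1:], r)) for r in rows]


def hybrid_rows(tabular_rows: list[list[float]], gnn_estimates: list[float]) -> list[list[float]]:
    """Pipeline step (assumed): append the GNN estimate as an extra tabular feature."""
    return [list(r) + [g] for r, g in zip(tabular_rows, gnn_estimates)]


# Synthetic data: fit time depends on graph structure that the single tabular column misses.
tabular = [[1.0], [2.0], [3.0], [4.0], [5.0]]
gnn_estimates = [10.0, 40.0, 20.0, 50.0, 30.0]
fit_times = [1.2 * g + 3.0 for g in gnn_estimates]

tab_only = predict(fit_linear(tabular, fit_times), tabular)
hybrid = predict(fit_linear(hybrid_rows(tabular, gnn_estimates), fit_times),
                 hybrid_rows(tabular, gnn_estimates))
print(f"tabular-only MAPE: {mape(fit_times, tab_only):.2f}%")
print(f"hybrid MAPE: {mape(fit_times, hybrid):.2e}%")
```

In this toy setup the tabular-only model cannot explain the targets, while the hybrid fits them almost exactly because the GNN estimate carries the missing structural signal. With a gradient-boosted model such as XGBoost, the same idea applies: the second stage learns to correct and calibrate the first stage's estimate rather than learning runtime from scratch.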

Contributors:

Format

On Demand · On Site