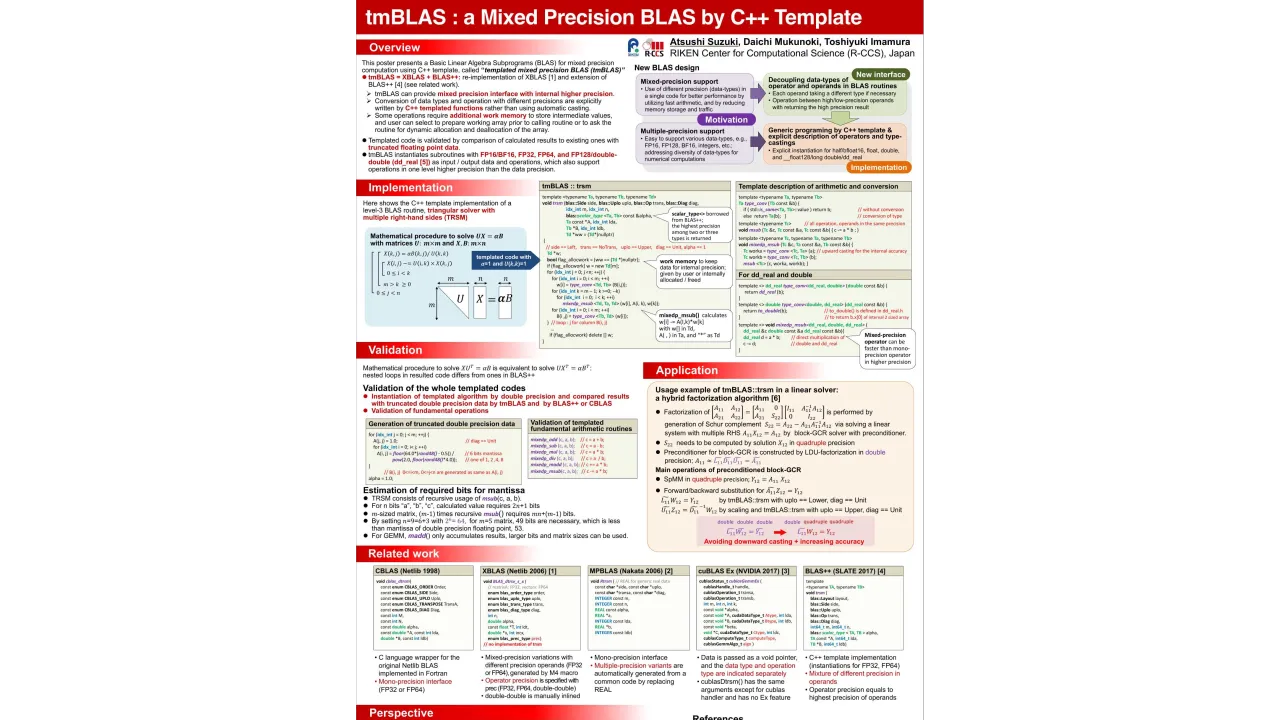

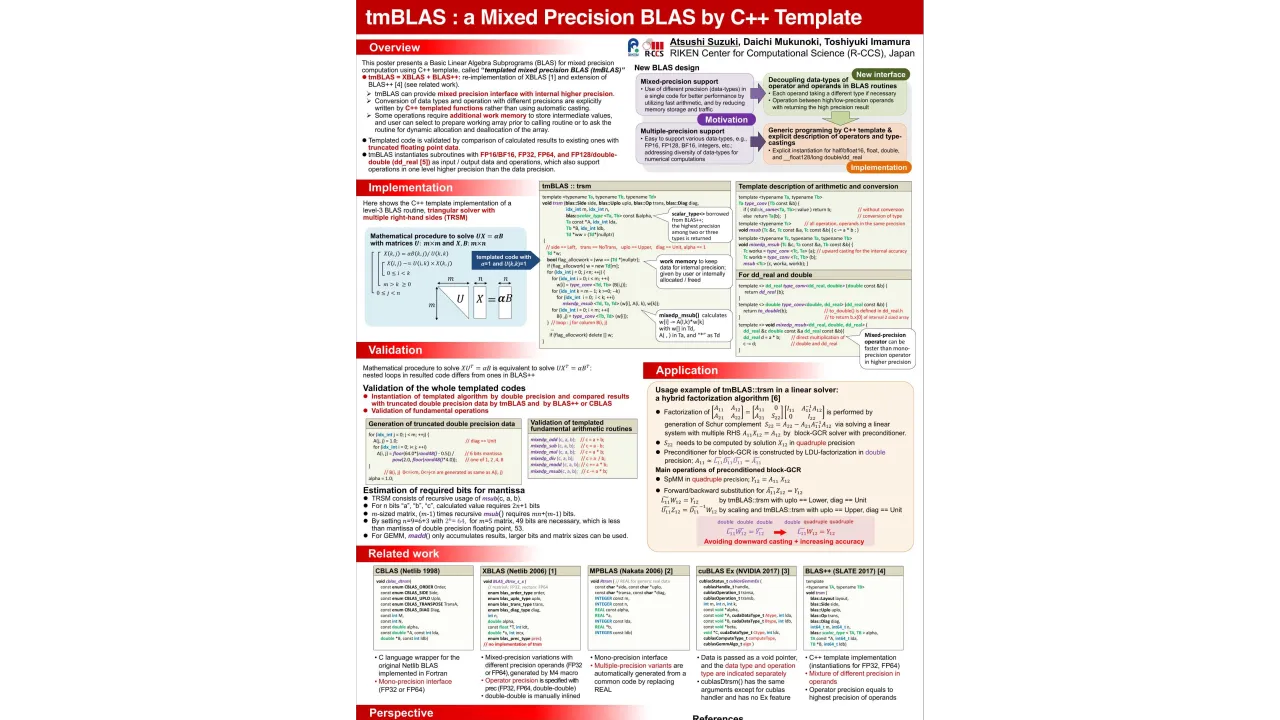

tmBLAS: A Mixed Precision BLAS by C++ Template

Monday, May 22, 2023 3:00 PM to Wednesday, May 24, 2023 5:00 PM · 2 days 2 hr. (Europe/Berlin)

Foyer D-G - 2nd Floor

Research Poster

Mixed Precision Algorithms, Numerical Libraries

Information

Numerical computations have traditionally used only the FP32 or FP64 floating-point formats defined by IEEE 754. Nowadays, various other formats have been introduced; for example, FP16 and FP128 were added in IEEE 754-2008. Mixed precision computation has become an active research topic, aiming at faster and more energy-efficient calculations by using lower precision as much as possible alongside the standard precisions. However, many existing libraries, and BLAS for linear algebra operations in particular, can perform computations in only one of FP32 or FP64 at a time. Several BLAS implementations, e.g., XBLAS, BLAS++, and MPBLAS, have been developed to support multiple precision and mixed precision arithmetic, but a library that can control the precision of both the data and the arithmetic operations is still needed.

We are developing a mixed precision BLAS using C++ templates, called tmBLAS, which supports combinations of precisions for input/output arguments and for internal arithmetic operations. Our implementation is based on the BLAS++ code and describes operators and operand data types explicitly, so that implicit data conversions are excluded. It can provide mixed precision arithmetic with higher internal precision; however, this requires an additional working array to store intermediate values, as well as loop exchanges to minimize the use of such an array. We have established a validation technique that ensures a newly constructed loop structure is equivalent to the original one, by sampling results computed on truncated double precision data, which make successive operations commutative. We will show an example of a mixed precision triangular solve used in a specialized linear solver.

Contributors:

Format: On-site

Beginner Level: 30%

Intermediate Level: 50%

Advanced Level: 20%