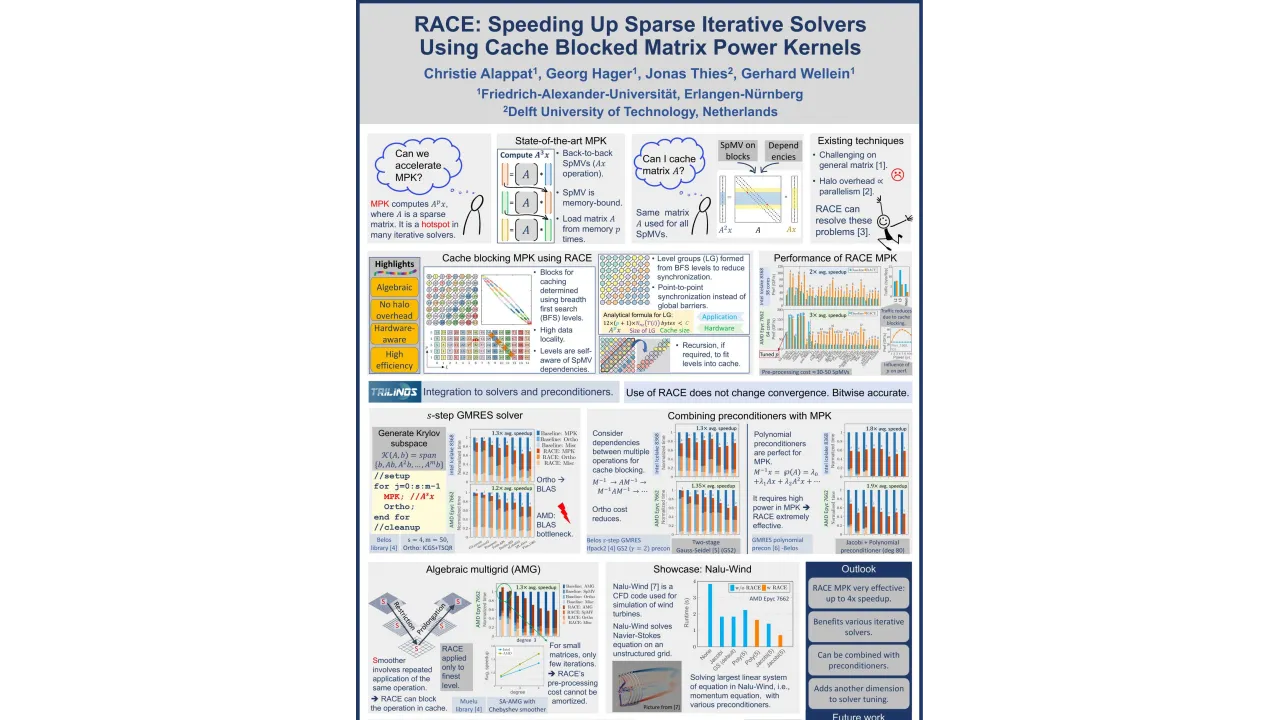

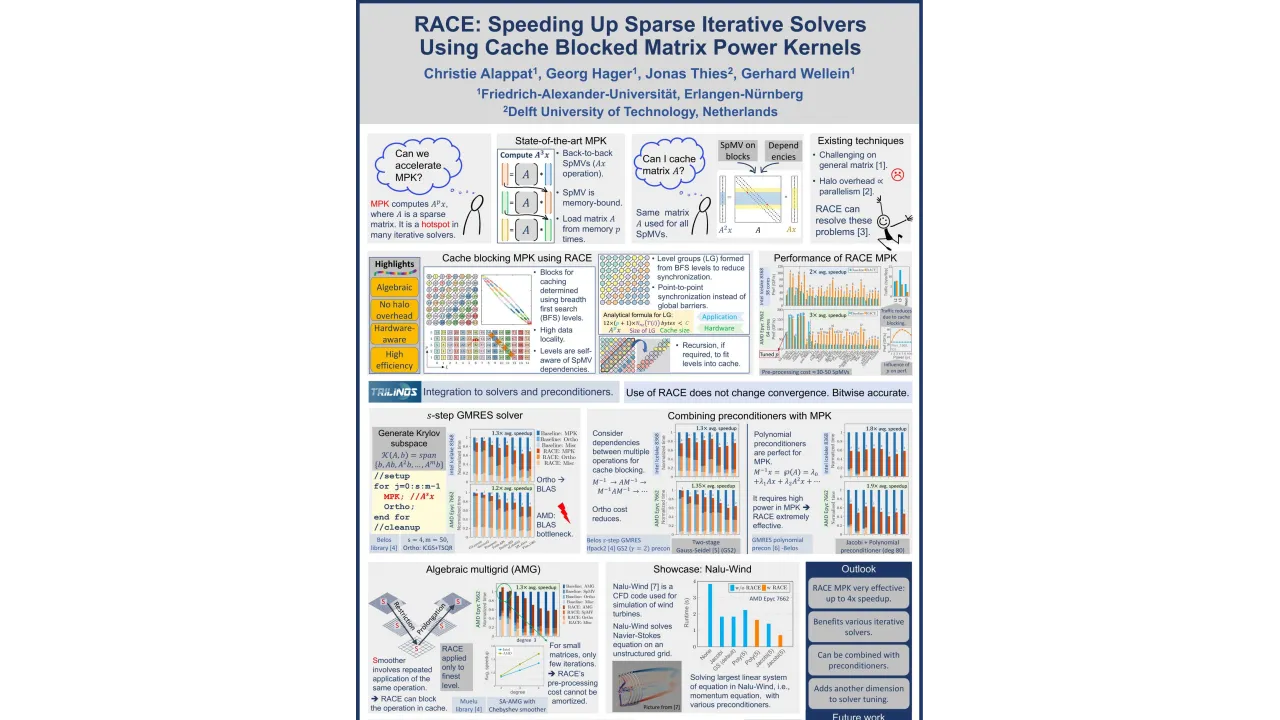

BEST POSTER FINALIST: RACE: Speeding Up Sparse Iterative Solvers Using Cache Blocked Matrix Power Kernels

Monday, May 22, 2023 3:00 PM to Wednesday, May 24, 2023 5:00 PM · 2 days 2 hr. (Europe/Berlin)

Foyer D-G - 2nd Floor

Research Poster

Numerical Libraries, Performance Modeling and Tuning

Information

Sparse linear iterative solvers are essential for large-scale simulations. In many of these simulations, most of the runtime is spent in matrix power kernels (MPK), which compute the product of a power of a sparse matrix A with a dense vector x, i.e., A^{p}x. Current state-of-the-art implementations perform MPK as p back-to-back sparse matrix-vector multiplications (SpMV), which requires streaming the large matrix A from main memory p times. RACE accelerates MPK computations by keeping parts of A in cache across successive SpMV calls. To achieve this, RACE uses a level-based approach: levels are constructed by a breadth-first search (BFS) on the graph of the underlying sparse matrix, and these levels are then used to cache-block the matrix elements for high spatial and temporal reuse. The approach is highly efficient, reaching 50 to 100 GFlop/s on a single modern Intel or AMD multicore chip, which typically corresponds to speedups of 2x to 4x over a highly optimized classical SpMV implementation.
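The two MPK strategies described above can be sketched in Python for a matrix stored in CSR format. This is a simplified illustration, not RACE's actual algorithm or API: the names `bfs_levels`, `mpk_baseline` and `mpk_level_blocked` are hypothetical, the matrix is assumed to be structurally symmetric (so every neighbour of a row in level L lies in level L-1, L or L+1), and the plain wavefront schedule ignores the cache-size-aware grouping of levels and the parallelization that RACE performs. It only shows why a BFS level ordering permits reuse: power k on level L can be computed as soon as power k-1 is available on levels L-1 to L+1.

```python
from collections import deque

# Hypothetical helpers; the matrix is stored in CSR form (indptr, indices, data).

def row_dot(indptr, indices, data, i, v):
    """Entry i of A*v."""
    return sum(data[j] * v[indices[j]] for j in range(indptr[i], indptr[i + 1]))

def mpk_baseline(indptr, indices, data, x, p):
    """p back-to-back SpMVs: the whole matrix is streamed p times.
    Returns [x, Ax, A^2 x, ..., A^p x]."""
    n = len(indptr) - 1
    ys = [list(x)]
    for _ in range(p):
        ys.append([row_dot(indptr, indices, data, i, ys[-1]) for i in range(n)])
    return ys

def bfs_levels(indptr, indices):
    """Split rows into BFS levels of the matrix graph.
    Assumes a structurally symmetric sparsity pattern; each further connected
    component starts a new level after the existing ones."""
    n = len(indptr) - 1
    level = [-1] * n
    for root in range(n):
        if level[root] >= 0:
            continue
        level[root] = max(level) + 1
        queue = deque([root])
        while queue:
            i = queue.popleft()
            for j in indices[indptr[i]:indptr[i + 1]]:
                if level[j] < 0:
                    level[j] = level[i] + 1
                    queue.append(j)
    levels = [[] for _ in range(max(level) + 1)] if n else []
    for i, lev in enumerate(level):
        levels[lev].append(i)
    return levels

def mpk_level_blocked(indptr, indices, data, x, p, levels):
    """Same result as mpk_baseline, computed as a wavefront over levels.
    At step t, power k is computed on level L = t - (k - 1); its inputs
    (power k-1 on levels L-1, L, L+1) were produced at steps t-2, t-1, t.
    Each step touches the rows of a window of p adjacent levels, and the
    window shifts by one level per step, so each level's rows are reused
    for all p powers on consecutive steps (ideally while still in cache)."""
    n = len(indptr) - 1
    ys = [list(x)] + [[0] * n for _ in range(p)]
    nlev = len(levels)
    for t in range(nlev + p - 1):
        # ascending k: power k-1 on level L+1 is finished before power k on L
        for k in range(1, p + 1):
            lev = t - (k - 1)
            if 0 <= lev < nlev:
                for i in levels[lev]:
                    ys[k][i] = row_dot(indptr, indices, data, i, ys[k - 1])
    return ys

def laplacian_1d(n):
    """Tridiagonal test matrix (2 on the diagonal, -1 off-diagonal) in CSR."""
    indptr, indices, data = [0], [], []
    for i in range(n):
        for j, v in ((i - 1, -1), (i, 2), (i + 1, -1)):
            if 0 <= j < n:
                indices.append(j)
                data.append(v)
        indptr.append(len(indices))
    return indptr, indices, data

A = laplacian_1d(6)
x = [1, 0, 0, 0, 0, 0]
levels = bfs_levels(A[0], A[1])   # [[0], [1], [2], [3], [4], [5]]
assert mpk_level_blocked(*A, x, 3, levels) == mpk_baseline(*A, x, 3)
print(mpk_baseline(*A, x, 2)[2])  # [5, -4, 1, 0, 0, 0]
```

Both functions perform the same arithmetic; only the order of the row updates differs. In the baseline, every power sweeps the whole matrix, whereas in the level-blocked version the matrix rows of a level are needed again on the very next steps, which is what makes cache blocking possible when levels are grouped to fit the cache.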

After demonstrating RACE's cache blocking approach, the poster sheds light on the use of cache-blocked MPK in iterative solvers. We discuss the benefits of integrating the RACE library into the Trilinos framework and demonstrate the speedups achieved in communication-avoiding s-step Krylov solvers, polynomial preconditioners, and algebraic multigrid (AMG) preconditioners. The poster concludes by showcasing RACE-accelerated solvers in a real-world wind turbine simulation (Nalu-Wind) and highlights the new possibilities and perspectives opened up by RACE's cache blocking technique.
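Both s-step Krylov solvers and polynomial preconditioners consume exactly what an MPK produces: the vectors x, Ax, ..., A^s x (the monomial s-step basis) or a linear combination of them. The Python sketch below applies a polynomial preconditioner z = q(A)x from a single MPK call. It is illustrative only: the function names are hypothetical, the MPK is written as plain back-to-back SpMVs (the part a cache-blocked kernel would replace), and the polynomial is a textbook truncated Neumann series q(A) = ω Σ_{j=0}^{m} (I - ωA)^j, assuming ω is chosen so that the spectral radius of I - ωA is below 1. It is not the polynomial used in the Trilinos experiments on the poster.

```python
from fractions import Fraction
from math import comb

def spmv(indptr, indices, data, v):
    """y = A v for a CSR matrix (indptr, indices, data)."""
    n = len(indptr) - 1
    return [sum(data[j] * v[indices[j]] for j in range(indptr[i], indptr[i + 1]))
            for i in range(n)]

def mpk(indptr, indices, data, x, p):
    """[x, Ax, ..., A^p x] via back-to-back SpMVs; this is the kernel a
    cache-blocked MPK would replace."""
    powers = [list(x)]
    for _ in range(p):
        powers.append(spmv(indptr, indices, data, powers[-1]))
    return powers

def neumann_coeffs(omega, m):
    """Monomial coefficients c_k of q(t) = omega * sum_{j=0}^{m} (1 - omega*t)^j.
    Expanding the binomials and applying the hockey-stick identity gives
    c_k = omega * (-omega)^k * C(m+1, k+1)."""
    return [omega * (-omega) ** k * comb(m + 1, k + 1) for k in range(m + 1)]

def apply_poly_precond(indptr, indices, data, x, coeffs):
    """z = q(A) x = sum_k c_k A^k x, with all powers from one MPK call."""
    powers = mpk(indptr, indices, data, x, len(coeffs) - 1)
    return [sum(c * powers[k][i] for k, c in enumerate(coeffs))
            for i in range(len(x))]

# Usage: 4x4 1D Laplacian in CSR, exact arithmetic via Fraction.
indptr = [0, 2, 5, 8, 10]
indices = [0, 1, 0, 1, 2, 1, 2, 3, 2, 3]
data = [2, -1, -1, 2, -1, -1, 2, -1, -1, 2]
z = apply_poly_precond(indptr, indices, data, [1, 0, 0, 1],
                       neumann_coeffs(Fraction(1, 4), 3))
```

The monomial form is used here because it maps directly onto the MPK output; for higher degrees the monomial basis becomes ill-conditioned, and practical s-step methods often switch to better-conditioned bases such as Newton or Chebyshev polynomials.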

Contributors:

Format: On-site

Beginner Level: 30%

Intermediate Level: 50%

Advanced Level: 20%