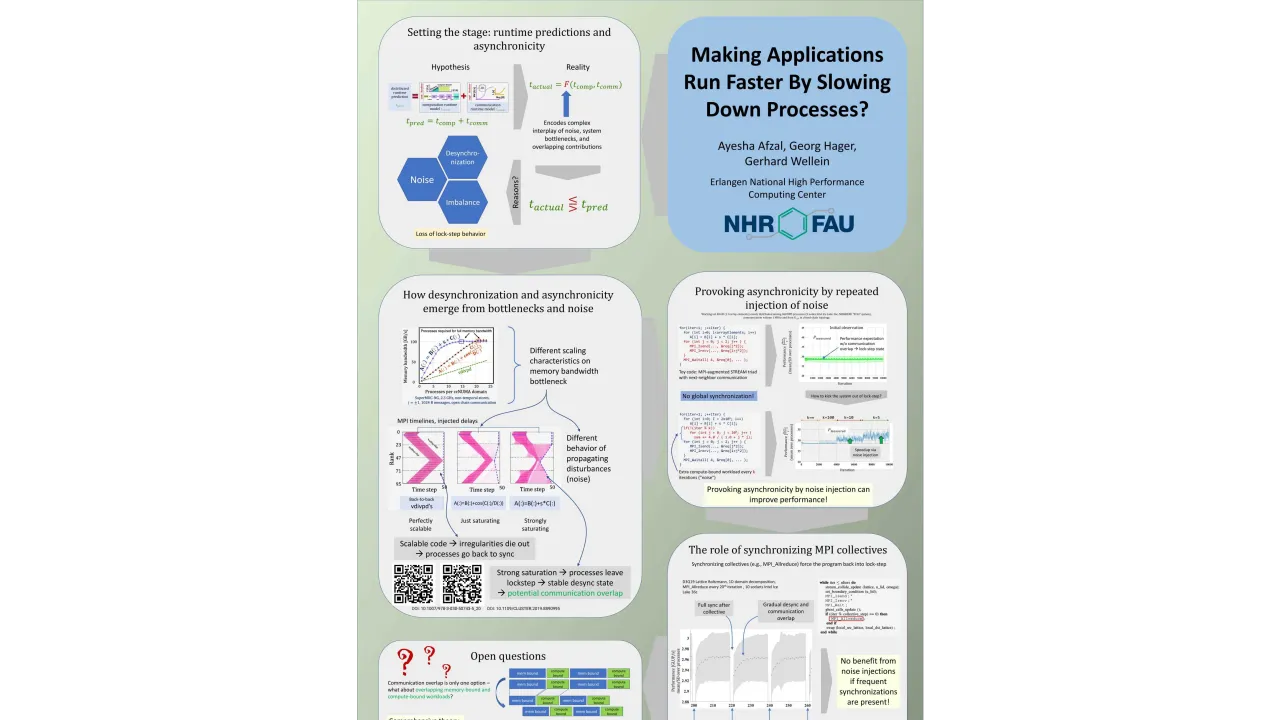

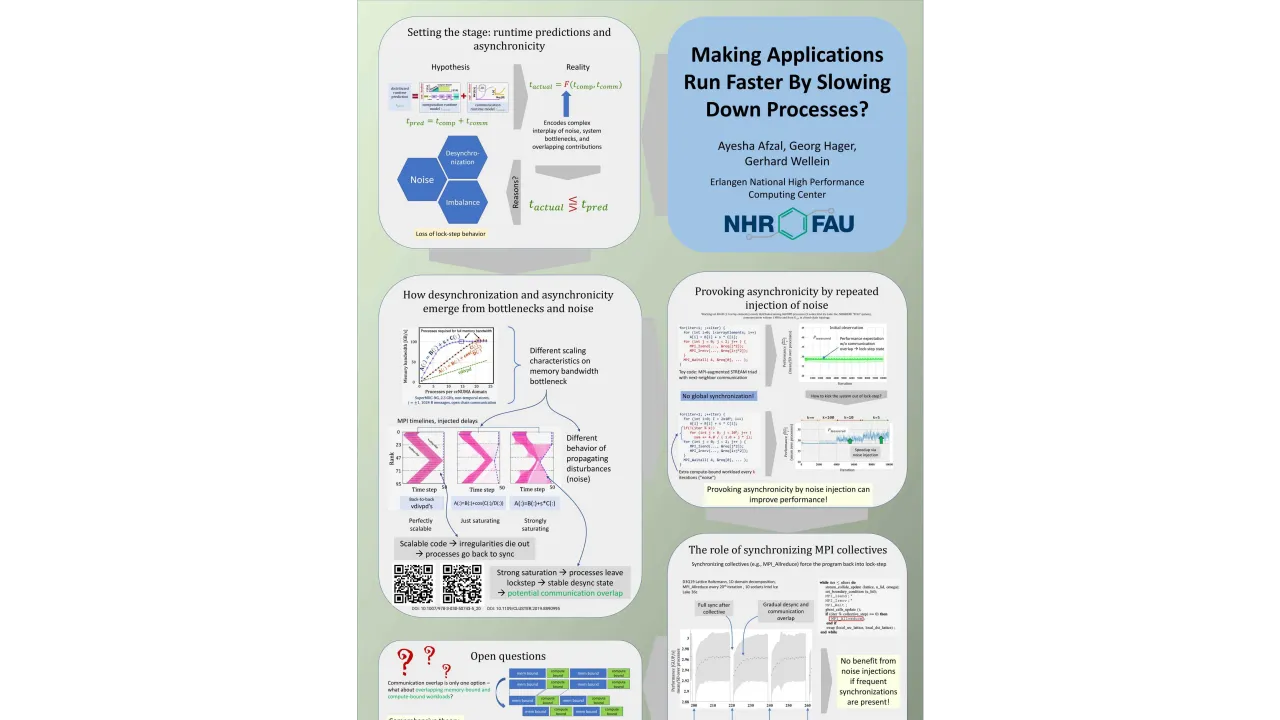

Making Applications Run Faster by Slowing Down Processes?

Monday, May 22, 2023 3:00 PM to Wednesday, May 24, 2023 5:00 PM · 2 days 2 hr. (Europe/Berlin)

Foyer D-G - 2nd Floor

Research Poster

Performance Modeling and Tuning

Information

MPI-parallel memory-bound programs show peculiar behavior when subjected to disturbances such as system noise, application noise, or load imbalance. The typical "lock-step" pattern that many numerical codes exhibit can dissolve, leading to a desynchronized state in which computation overlaps naturally with communication. This means that simply adding (modeled or measured) execution time and (modeled or measured) communication time may not be a good prediction of the overall program runtime. Surprisingly, performance may even improve relative to the synchronized state, because the memory bandwidth bottleneck is partially evaded and communication overhead is hidden. Although this transition can occur spontaneously, it can also be provoked or accelerated by deliberately injecting random delays that "kick" the program out of lock-step. The temporary performance loss caused by the injection is more than compensated by improved resource utilization through bottleneck evasion and communication-computation overlap. We demonstrate this mechanism using a prototypical toy code that combines memory-bound, streaming data access with next-neighbor communication. Using a D3Q19 lattice Boltzmann method (LBM) code, we further show that synchronizing collectives such as MPI_Allreduce (unsurprisingly) destroy the desynchronized state. However, the program can fall back into desynchronization on its own. This means that even strongly synchronizing collectives need not be detrimental to bottleneck evasion if they are used sparingly. Our research raises interesting questions about parallel program dynamics and about whether balancing the workload or mitigating noise is beneficial under all circumstances.
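The bottleneck-evasion argument can be made concrete with a back-of-the-envelope model. The Python sketch below is not the model used in the poster; it is a hypothetical illustration under stated assumptions: each process streams at most at `bw_core`, processes that stream at the same time share `bw_socket` equally, communication is blocking and never overlapped within a process, and in the desynchronized state the processes' phases are spread evenly. All function names and numbers are made up for illustration.

```python
# Hypothetical back-of-the-envelope model (not the poster's model).
# Assumptions: simple bandwidth-saturation law, blocking communication,
# evenly spread phases in the desynchronized state.

def exec_time(volume, active, bw_core, bw_socket):
    """Time for one streaming sweep over `volume` bytes when `active`
    processes share the socket bandwidth."""
    per_proc_bw = min(bw_core, bw_socket / active)
    return volume / per_proc_bw


def lockstep_iteration(n_procs, volume, t_comm, bw_core, bw_socket):
    # All processes stream at the same time, then all communicate:
    # execution and communication time simply add up.
    return exec_time(volume, n_procs, bw_core, bw_socket) + t_comm


def desync_iteration(n_procs, volume, t_comm, bw_core, bw_socket, iters=200):
    # Evenly spread phases: on average only the fraction
    # t_exec / (t_exec + t_comm) of the processes streams at once.
    # That fraction depends on t_exec itself, so solve for it
    # self-consistently by fixed-point iteration.
    active = float(n_procs)
    for _ in range(iters):
        t_exec = exec_time(volume, active, bw_core, bw_socket)
        active = n_procs * t_exec / (t_exec + t_comm)
    return exec_time(volume, active, bw_core, bw_socket) + t_comm


# Hypothetical parameters: 10 processes, 1 GB streamed per iteration,
# 10 GB/s per core, 50 GB/s per socket, 50 ms of communication.
N, V = 10, 1e9
BW_CORE, BW_SOCKET = 10e9, 50e9
T_COMM = 0.05

t_lock = lockstep_iteration(N, V, T_COMM, BW_CORE, BW_SOCKET)
t_desync = desync_iteration(N, V, T_COMM, BW_CORE, BW_SOCKET)
print(f"lock-step: {t_lock:.3f} s/iter, desynchronized: {t_desync:.3f} s/iter")
# prints "lock-step: 0.250 s/iter, desynchronized: 0.200 s/iter"
```

With these made-up numbers, on average about 7.5 of the 10 processes stream concurrently in the desynchronized state, so each gets more bandwidth and the iteration time drops even though no communication is hidden. Any real overlap of communication with computation would add to this gain; the sketch deliberately leaves it out to isolate the bandwidth effect.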

Contributors:

Format: On-site

Beginner Level: 10%

Intermediate Level: 90%